Sitemap

A list of all the posts and pages found on the site. For you robots out there, an XML version is also available for digesting.

Pages

Posts

Paged Attention and vLLM

Paged Attention is a memory optimization on which the vLLM Inference Engine is based. Here is a summary of the paper on paged attention and the key features of vLLM that make it so powerful.

Are Autoencoders Fundamentally Denoisers?

The core idea behind autoencoders is to bottleneck information flow by restricting the number of dimensions in the latent space, so that the DNN is forced to prioritize which information to propagate to the next layer. In this project, I explore how this can be a useful denoising tool.

Einops and Einsum Summarized

A brief summary of einops and einsum, with usage documentation and an implementation of average pooling in CNNs using einops (inspired by the max pooling layer implemented in the original library documentation).

Implementing GPT from Scratch

This article gives the conceptual explanation needed to build a language model from scratch using the decoder-only transformer architecture. It is based on Andrej Karpathy's GPT from scratch. The code for this conceptual guide can be found here.

Review: Interpretability in the Wild: A Circuit for Indirect Object Identification in GPT-2 Small

A paper review highlighting the key discoveries with respect to attention heads and the algorithms used.

Review: A Mathematical Framework for Transformer Circuits

This paper provides a mental model for reasoning about the internal workings of transformers and attention heads in deep neural networks. The insights here help understand and analyze the behaviors of large models.

Portfolio

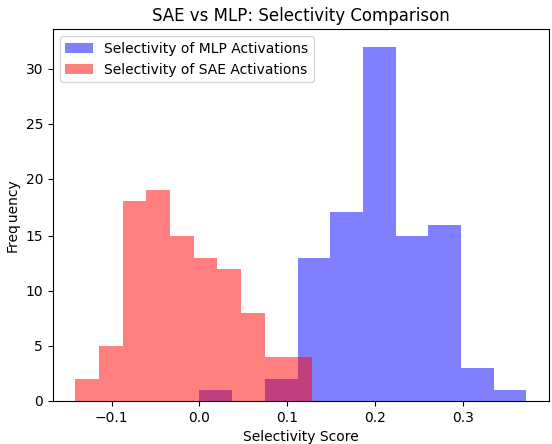

Predicting AI bias using SAEs

A comparative analysis of how Sparse Autoencoders and MLP activations encode gender information inside LLMs.

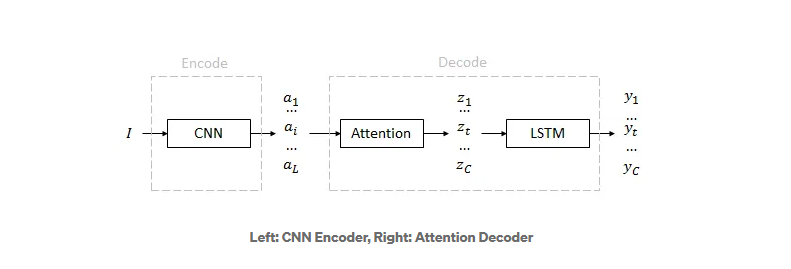

Automated Image Captioning

Using Attention to predict image captions with greater accuracy.

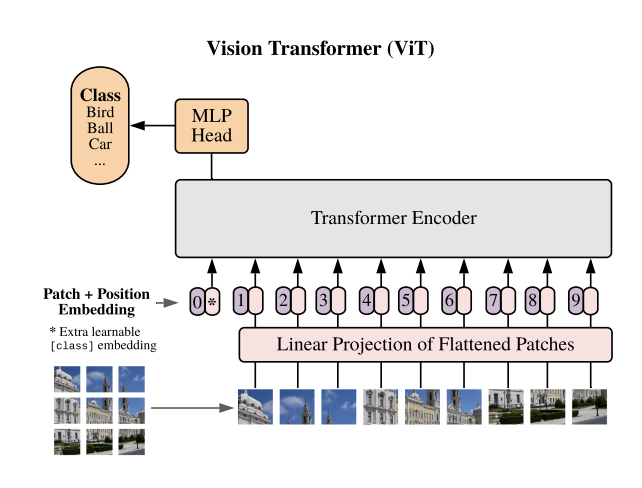

Transfer Learning for Image Classification

Fine-tuning ViT and ResNet for image classification on Google Cloud.

Automatic Summarization of Job Descriptions with LLMs

A workflow to fetch and summarize job descriptions with Llama 3-70B.

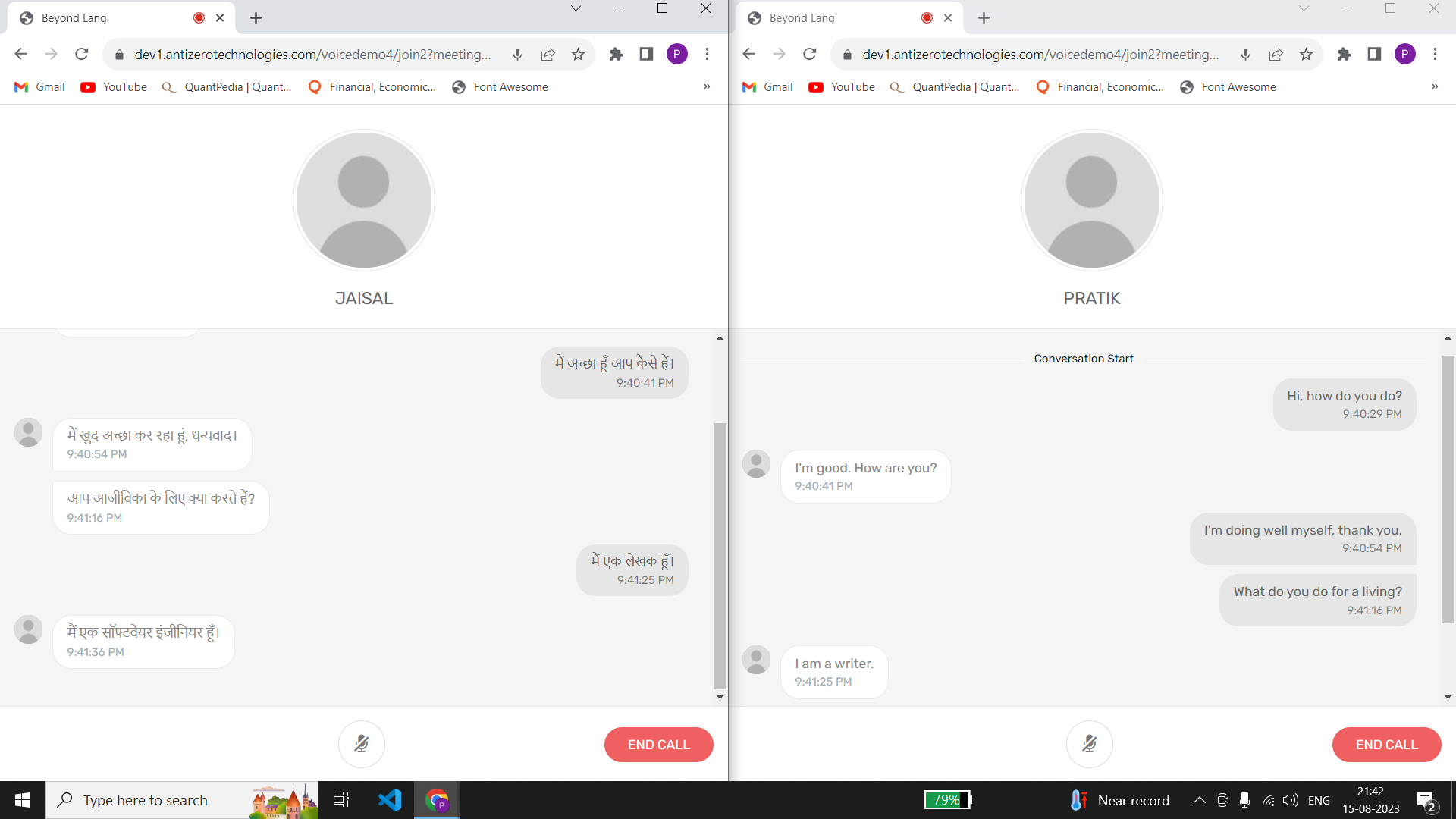

Beyond Lang

Voice calling with real-time speech translation and transcription.

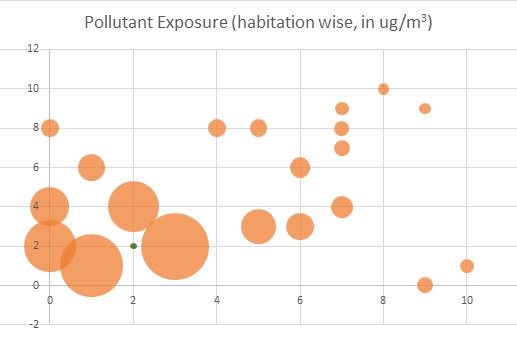

Minimizing Power Plant Externalities

Using simulations to optimize power plant location for minimal externality.

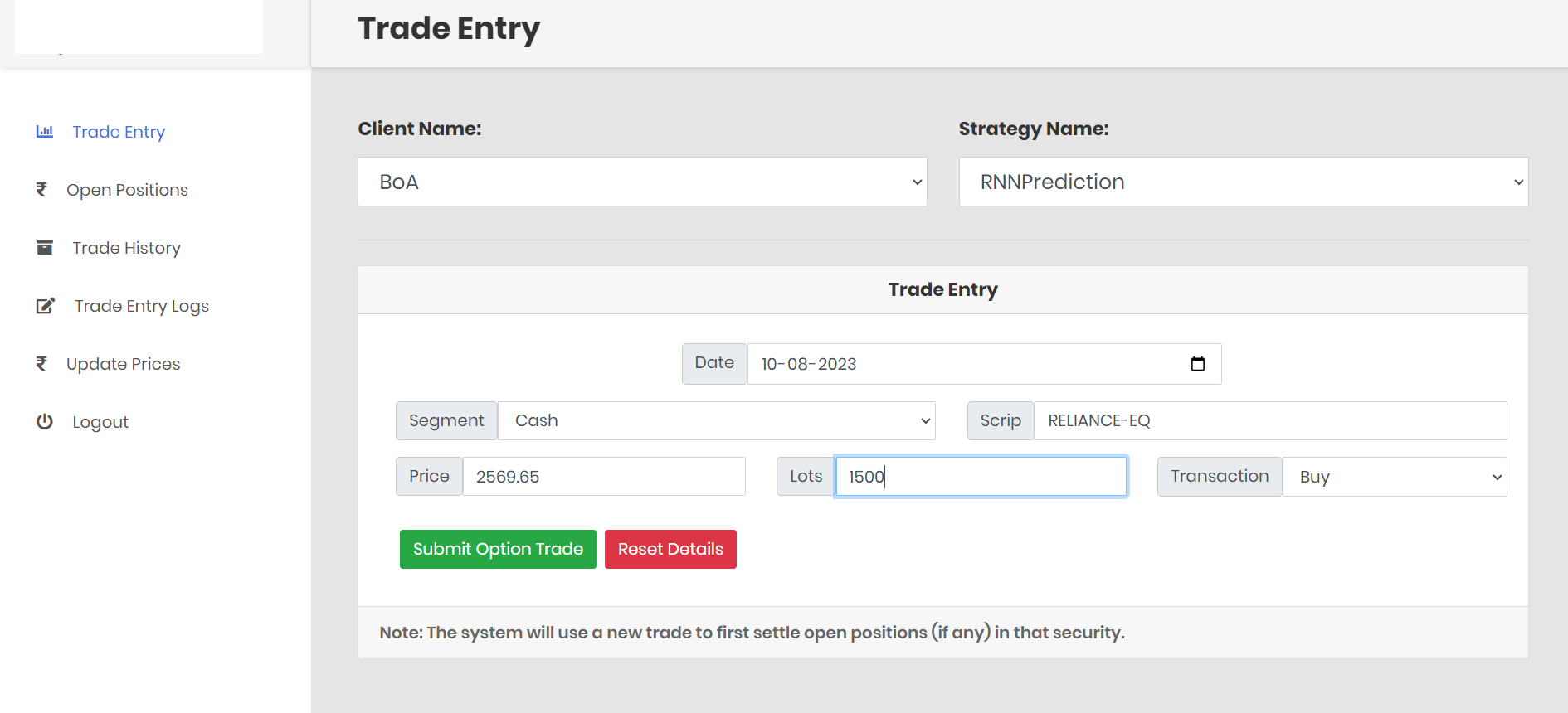

Web-based Accounting System

One-stop shop for managing and reconciling financial trades.

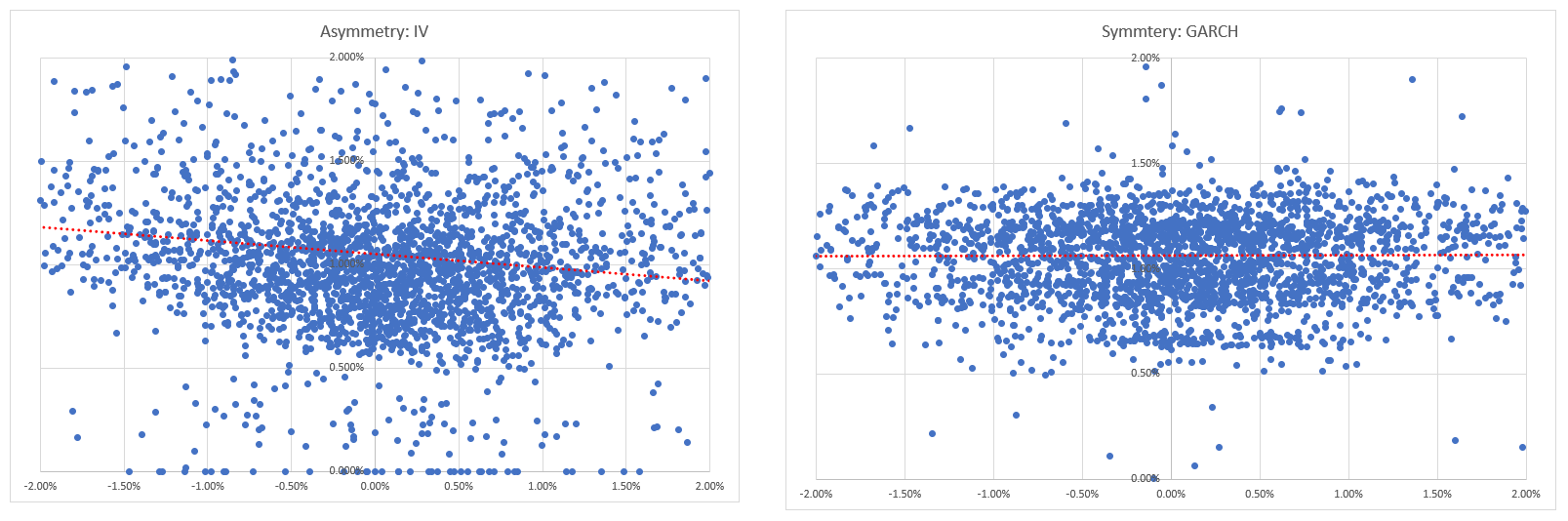

Volatility Prediction in Financial Markets

Using an ensemble of GARCH models to predict financial volatility with greater accuracy.

Publications

Applications of Operations Research in Minimizing Emission related Externalities of Power Plants

Published in International Journal of Scientific Research and Engineering Development, 2019

Minimizing Toxic Exposure from Power Plants

Recommended citation: Doshi P et al. (2019). "Applications of operations research in minimizing emission related externalities of power plants." International Journal of Scientific Research and Engineering Development.

Download Paper

Talks

Talk 1 on Relevant Topic in Your Field

This is a description of your talk, which is a markdown file that can be all markdown-ified like any other post. Yay markdown!

Conference Proceeding talk 3 on Relevant Topic in Your Field

This is a description of your conference proceedings talk. Note the different field in type. You can put anything in this field.

Teaching

Teaching experience 1

Undergraduate course, University 1, Department, 2014

This is a description of a teaching experience. You can use markdown like any other post.

Teaching experience 2

Workshop, University 1, Department, 2015

This is a description of a teaching experience. You can use markdown like any other post.